In today's article, we'll discuss load balancers: software that spreads traffic across your servers so they keep running and nobody sees a funny (and terrible) 504 error.

Load balancer

A load balancer is a network device or piece of software that distributes incoming network traffic across multiple servers, services, or endpoints. It also optimizes resource utilization, maximizes throughput, reduces response times, and ensures high availability and fault tolerance for the system.

Some time ago, I worked at a company where the load balancer was a hardware device installed in a data center to serve VPS hosting, treated like a tesseract: "so powerful, solving all scaling problems... no 😂."

Nowadays, I deal with software load balancers, and I can't picture a hardware load balancer anywhere outside of cloud providers' data centers.

Load balancers use algorithms to route requests and handle them. Round-robin, IP hash, and least response time are the most common algorithms.
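To make the other two algorithms concrete, here is a minimal Python sketch of IP hash and least response time. It is a simplification under stated assumptions, not how HAProxy or NGINX implement them: the server names and the response-time figures are hypothetical, and real balancers usually weigh in extra signals such as active connections.

```python
import hashlib

# Hypothetical backend names, used only for illustration.
SERVERS = ["app1", "app2"]


def pick_by_ip_hash(client_ip, servers):
    """Map a client IP to a server so the same client always lands on the same one."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


def pick_by_least_response_time(avg_response_ms):
    """Pick the server with the lowest recorded average response time (in ms)."""
    return min(avg_response_ms, key=avg_response_ms.get)


# The same client IP is always routed to the same server.
first = pick_by_ip_hash("10.0.0.7", SERVERS)
second = pick_by_ip_hash("10.0.0.7", SERVERS)

# Assumed measurements: app2 is currently answering faster.
fastest = pick_by_least_response_time({"app1": 120.0, "app2": 45.5})
```

IP hash trades even distribution for stickiness, while least response time adapts to how the servers are actually behaving.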

I chose the round-robin algorithm for this article because it is the simplest one: servers sit in a cyclic queue and each incoming request goes to the next server in turn, much like round-robin task scheduling, where each ready task runs turn by turn for a limited time slice.

With two servers, the first request hits the first server, the second request hits the second server, the third request hits the first server again, and so on.
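The alternation described above can be sketched in a few lines of Python. This is only an illustration of the idea, with hypothetical server names; it is not the code HAProxy or NGINX run internally.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers one after another, wrapping around at the end."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = cycle(servers)

    def next_server(self):
        return next(self._servers)


# Hypothetical server names, used only for illustration.
balancer = RoundRobinBalancer(["server-1", "server-2"])
hits = [balancer.next_server() for _ in range(5)]
print(hits)
# ['server-1', 'server-2', 'server-1', 'server-2', 'server-1']
```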

Next, I will describe two well-known players that can be used as load balancers: HAProxy and NGINX.

HAProxy

HAProxy is free, open-source load balancer and proxy server software that provides high availability, load balancing, and proxying for TCP and HTTP-based applications. It is commonly used to distribute incoming traffic across multiple servers, improving web application performance and availability.

Here are a few examples of how HAProxy can be used:

- Load balancing: HAProxy can distribute incoming traffic across multiple web servers, which avoids overloading any one server and improves web application performance and scalability.

- High availability: HAProxy can be set up to monitor web server health and redirect traffic to healthy servers. This ensures that web applications can still be accessed even if one or more servers fail.

- SSL termination: HAProxy can terminate SSL connections and decrypt traffic before passing it on to web servers. This offloads the encryption workload from the web servers, improving performance and scalability.

- Reverse proxy: HAProxy can expose internal web servers to the public internet. This protects web servers from direct access and improves security.

- TCP proxying: HAProxy can also proxy TCP connections, such as database connections or other non-web applications like RabbitMQ (I intend to write about this in future posts). This can also help with application performance and scalability.
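The high-availability item in the list above is the one that most benefits from a small example. The Python sketch below combines round-robin with a set of healthy servers, skipping any server that has been marked down. It is a toy model under assumptions: the server names are hypothetical, and real health checks (periodic probes, rise and fall thresholds) are left out.

```python
class HealthAwareBalancer:
    """Round-robin over servers, skipping the ones marked as down."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._position = 0

    def mark_down(self, server):
        self._healthy.discard(server)

    def mark_up(self, server):
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self):
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self._servers)):
            candidate = self._servers[self._position]
            self._position = (self._position + 1) % len(self._servers)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


# Hypothetical server names; web2 "fails" and traffic flows around it.
pool = HealthAwareBalancer(["web1", "web2", "web3"])
pool.mark_down("web2")
while_down = [pool.next_server() for _ in range(4)]

pool.mark_up("web2")
after_recovery = [pool.next_server() for _ in range(3)]
```

While `web2` is down, requests alternate between `web1` and `web3`; once it is marked up again it rejoins the rotation.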

It's worth noting that HAProxy works with Kubernetes, Docker Swarm, and Nomad, and is the default proxy for OpenShift.

NGINX

NGINX is a web server that can also be used as a reverse proxy, load balancer, mail proxy and HTTP cache.

I wrote an article about NGINX and you can see how strong NGINX is.

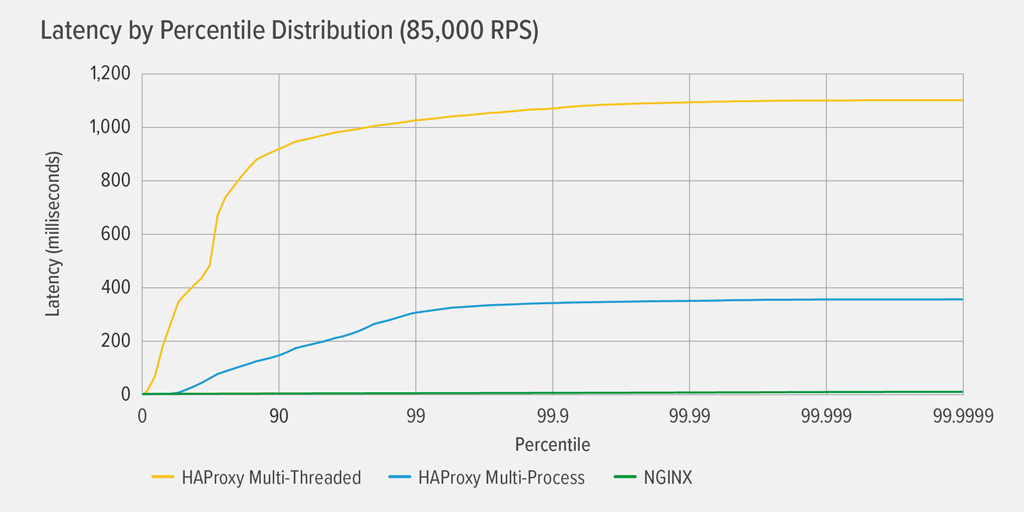

NGINX can also be used as a load balancer in some cases, but it tends to be less performant than HAProxy. Here's a latency comparison.

⚠️ Less performant does not mean "not worth it"; NGINX's load balancing is good enough in many cases.

More information can be found in the NGINX article.

So, after the introduction, let's get started on the implementation.

Show me the code

Web Application

This time I chose ASP.NET for our containerized web application. To demonstrate the load balancer in action, the implementation counts server hits and returns the hostname in the response.
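To show the behavior without the ASP.NET project at hand, here is a Python stand-in for the hit counter. It is not the article's `Program.cs`; the class name and the response text are assumptions made up for illustration.

```python
import socket
import threading


class HitCounter:
    """Counts requests and reports which host served each one."""

    def __init__(self, hostname=None):
        # Inside a container, the hostname identifies the instance.
        self._hostname = hostname or socket.gethostname()
        self._hits = 0
        self._lock = threading.Lock()

    def handle_request(self):
        # The lock keeps the counter correct under concurrent requests.
        with self._lock:
            self._hits += 1
            hits = self._hits
        # Assumed response format, not the one used by the real application.
        return f"Hit {hits} served by {self._hostname}"


counter = HitCounter("web1")
first_response = counter.handle_request()
second_response = counter.handle_request()
```

Each instance keeps its own counter, so behind a load balancer the counts reveal how requests were shared between instances.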

Please copy these assets to prepare the web application.

💡 Don't forget to maintain the directory structure.

- aspnet-hit-counter/aspnet-hit-counter.csproj

- aspnet-hit-counter/Program.cs

- aspnet-hit-counter/appsettings.json

- aspnet-hit-counter/Dockerfile

HAProxy

- haproxy/haproxy.cfg

- global: starts the global section;

- daemon: makes the process fork into the background;

- defaults: defines default directives for the frontend, backend, and listen sections;

- timeout: defines, in milliseconds, the timeouts for connect, client, and server;

- frontend: defines a name, the port it binds to, and the default backend;

- backend: defines the servers and the balancing algorithm.

NGINX

- nginx/nginx.conf

- server: defines ports, hostname, and route locations;

- location: defines the URI path and proxy settings;

- upstream: defines the servers and their weights, which decide how the load is shared among the servers.
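To see how weights in an upstream can shape the distribution, here is a Python sketch of a smooth weighted round-robin. It illustrates the general technique under assumptions (hypothetical server names and weights) and is not NGINX's actual code.

```python
from collections import Counter


def smooth_weighted_round_robin(weights, requests):
    """Return the server chosen for each request, honoring the weights."""
    if not weights or any(weight <= 0 for weight in weights.values()):
        raise ValueError("weights must be a non-empty mapping of positive numbers")

    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(requests):
        # Every server gains its weight, then the leader is picked and penalized.
        for server, weight in weights.items():
            current[server] += weight
        chosen = max(current, key=current.get)
        current[chosen] -= total
        picks.append(chosen)
    return picks


# Hypothetical weights: app1 should receive twice as many requests as app2.
picks = smooth_weighted_round_robin({"app1": 2, "app2": 1}, 6)
distribution = Counter(picks)
```

With equal weights this degrades to the plain alternation used in this article; unequal weights interleave the servers instead of sending bursts to the heavier one.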

Containers

Now we have two compose files, each with ☝🏾 load balancer and ✌🏾 web applications.

- haproxy.docker-compose.yml

- nginx.docker-compose.yml

Working together

It's now time to run containers. The exposed ports will be 8000 (HAProxy) and 8001 (NGINX); please use your favorite terminal to run the following command:

As a result, the animation below shows hits alternating between the servers, so each one handles the same number of requests.
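If you would rather check the balance with numbers, a small Python script can send a batch of requests and tally which server answered. This is a sketch under assumptions: the ports come from this article, but the root path `/` and the response format are guesses, so the `key` function that extracts the hostname must be adapted to what your application returns.

```python
from collections import Counter
from urllib.request import urlopen


def fetch_body(url):
    """Fetch a URL and return its body as stripped text."""
    with urlopen(url, timeout=5) as response:
        return response.read().decode("utf-8").strip()


def tally_responses(fetch, url, requests, key=lambda body: body):
    """Call fetch(url) `requests` times and count responses grouped by key(body)."""
    return Counter(key(fetch(url)) for _ in range(requests))


# Example usage against the running containers (not executed here):
#   tally_responses(fetch_body, "http://localhost:8000/", 10, key=my_hostname_parser)
# where my_hostname_parser is a hypothetical function that pulls the hostname
# out of the response body.
```

With two servers and round-robin, ten requests should come back as five per hostname.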

Removing containers

That's all

If you prefer, you can clone the repository

The most effective way to learn is to first understand how load balancers work and try them out in your local development environment. Cloud providers offer load balancer implementations that are simple and clear to set up, so I suggest you start there.

Your kernel 🧠 was released, bug-free, and working fast. God bless you and bye🔥.